A guide for SEOs to understand large language models

Should I use large language models to research keywords? Is ChatGPT my friend?

If you’ve been asking yourself these questions, this guide is for you.

This guide covers what SEOs need to know about large language models, natural language processing and everything in between.

Large language models, natural language processing and more in simple terms

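There are two ways to get a person to do something: tell them exactly what to do, or hope they figure it out themselves. Computers are similar. In traditional programming, you tell the computer exactly what to do; in machine learning, the computer learns how to do the work from data. Within machine learning, supervised learning means training a model on examples labeled with the right answer, while unsupervised learning means letting the model find patterns in unlabeled data on its own.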

Natural language processing (NLP) is a way to break text down into numbers so that computers can analyze it. Computers analyze patterns within words and, as the models become more sophisticated, the relationships between words. A model that uses unsupervised machine learning can be trained on a variety of datasets.
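To make "break text down into numbers" concrete, here is a minimal Python sketch. It assumes simple lowercase, whitespace-based tokenization (real NLP pipelines and LLMs use subword tokenizers instead), and the function names are illustrative. It gives every word an integer ID and turns a sentence into a vector of word counts.

```python
from collections import Counter


def build_vocab(texts):
    """Assign an integer ID to every distinct lowercase word, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn a sentence into a list of word IDs (words not in the vocabulary are skipped)."""
    return [vocab[w] for w in text.lower().split() if w in vocab]


def count_vector(text, vocab):
    """Bag-of-words vector: how many times each vocabulary word appears in the text."""
    counts = Counter(text.lower().split())
    return [counts.get(word, 0) for word in vocab]


texts = ["The cat sat on the mat", "The dog sat"]
vocab = build_vocab(texts)
print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4, 'dog': 5}
print(encode("the cat sat", vocab))  # [0, 1, 2]
print(count_vector("The cat sat on the mat", vocab))  # [2, 1, 1, 1, 1, 0]
```

Once text is numbers, a computer can compare, count and find patterns in it; everything an NLP model does starts from a representation like this.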

For example, if you trained a language model on average reviews of the movie “Waterworld,” you would get a model that is good at writing (or understanding) reviews of “Waterworld.”

If you trained it only on the two positive reviews I wrote of “Waterworld,” it would only understand those positive reviews.
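A toy bigram model shows the same limitation. In this Python sketch, the two "reviews" are made-up placeholder sentences (not the author's actual reviews), and the model only records which word followed which. It can only reproduce word pairs it saw in training, and it has nothing to say about a word it never saw.

```python
from collections import defaultdict


def train_bigrams(texts):
    """Record, for every word, the list of words that followed it in the training texts."""
    followers = defaultdict(list)
    for text in texts:
        words = text.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers


def most_likely_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    options = followers.get(word.lower())
    if not options:
        return None
    # max() keeps the first of several equally frequent followers
    return max(options, key=options.count)


# Hypothetical training data: two short positive reviews
reviews = [
    "waterworld is a great movie",
    "waterworld is a fun movie with great stunts",
]
model = train_bigrams(reviews)
print(most_likely_next(model, "is"))      # a
print(most_likely_next(model, "a"))       # great
print(most_likely_next(model, "boring"))  # None
```

Asking it about "boring" returns nothing because no training text contained that word; a model trained only on praise has no way to produce criticism.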

Large language models (LLMs) are neural networks with over a billion parameters. These models are so large that they can generalize. They’re not just trained on the positive and negative reviews of “Waterworld,” but also on Wikipedia articles, news websites and other sources. Machine learning projects are heavily dependent on context: a model does well on data like the data it was trained on (in context) and poorly on anything else (out of context). If you train a model to identify bugs and then show it a picture of a cat, it will not be very good at the task. This is why self-driving car technology is so hard: there are so many out-of-context situations that it is difficult to generalize the model’s knowledge.

LLMs seem to be (and can be) more generalized than other machine learning projects. This is due to the sheer volume of data and the ability to crunch billions of relationships.

Let’s talk about one of the breakthrough technologies that allow for this – transformers.

Explaining transformers from scratch

A type of neural network architecture, transformers have revolutionized the NLP field. Before transformers, NLP models relied on a technique known as recurrent neural networks (RNNs), which processed text sequentially, one word at a time. This approach had its limitations: it was slow and struggled to handle long-range dependencies in text.

Transformers changed this.

In the landmark 2017 paper “Attention Is All You Need,” Vaswani et al. introduced the transformer architecture. Instead of processing text sequentially, transformers use a mechanism called “self-attention” to process words in parallel, allowing them to capture long-range dependencies more efficiently.

Previous architectures included RNNs and long short-term memory (LSTM) networks. Recurrent models like these are commonly used for tasks that involve sequences of data, such as speech or text. But they have one problem: they can only handle one piece of data at a time. This slows them down and limits the amount of data they can use.

Attention mechanisms were introduced to process sequence data in a new way. They allow the model to examine all the pieces of data at once and determine which ones are important, which is very helpful for many tasks. However, most models that used attention at the time also employed recurrent processing.

Essentially, these models could look at all of the data at once but still had to process it in order. Vaswani et al.’s paper asked, “What if we only used the attention mechanism?”
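The difference between recurrent and attention-only processing can be sketched in a few lines of Python. This is a deliberately simplified toy: it assumes one-number "embeddings," and the weights `w_hidden` and `w_input` are hypothetical values, not taken from any real model. The recurrent loop cannot compute a step until the previous step is finished, while each attention output depends only on the inputs, so every position could be computed at the same time.

```python
import math


def rnn_states(inputs, w_hidden=0.5, w_input=1.0):
    """Recurrent processing: each hidden state depends on the previous one,
    so the loop has to run strictly in order, one token at a time."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_hidden * h + w_input * x)
        states.append(h)
    return states


def attention_outputs(inputs):
    """Attention-only processing: each output is a softmax-weighted average of
    all inputs and never looks at any other output, so every position
    could be computed in parallel."""
    def output_for(i):
        scores = [inputs[i] * x for x in inputs]  # similarity to every token
        exps = [math.exp(s) for s in scores]
        total = sum(exps)
        return sum(e / total * x for e, x in zip(exps, inputs))
    return [output_for(i) for i in range(len(inputs))]


tokens = [0.2, 0.9, 0.4]  # toy one-number "embeddings"
print(rnn_states(tokens))
print(attention_outputs(tokens))
```

The list comprehension in `attention_outputs` runs sequentially here, but nothing stops each `output_for(i)` call from running on a separate processor; the `for` loop in `rnn_states` has no such freedom.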

Attention is a way for the model to focus on certain parts of the input sequence when processing it. For instance, when we read a sentence, we naturally pay more attention to some words than others, depending on the context and what we want to understand.

In a transformer, the model computes a score for each word in the input sequence based on how important it is for understanding the overall meaning of the sequence. It uses these scores to pay more attention to the important words and less to the unimportant ones. This is what makes the transformer such a powerful tool for natural language processing: it can quickly capture the meaning and context of a sentence or a long sequence of text.

Let’s say the embeddings for each word in the sentence “The cat sat on the mat” are:

- The: [0.2, 0.1, 0.3, 0.5]
- cat: [0.6, 0.3, 0.1, 0.2]
- sat: [0.1, 0.8, 0.2, 0.3]
- on: [0.3, 0.1, 0.6, 0.4]
- the: [0.5, 0.2, 0.1, 0.4]
- mat: [0.2, 0.4, 0.7, 0.5]

Then, the transformer computes a score for each word in the sentence based on its relationship with all the other words in the sentence.

This is done using the dot product of each word’s embedding with the embeddings of all the other words in the sentence.

For example, to compute the scores for the word “cat,” we would take the dot product of its embedding with the embeddings of all the other words:

- “The” and “cat”: 0.2*0.6 + 0.1*0.3 + 0.3*0.1 + 0.5*0.2 = 0.28
- “cat” and “sat”: 0.6*0.1 + 0.3*0.8 + 0.1*0.2 + 0.2*0.3 = 0.38
- “cat” and “on”: 0.6*0.3 + 0.3*0.1 + 0.1*0.6 + 0.2*0.4 = 0.35
- “cat” and “the”: 0.6*0.5 + 0.3*0.2 + 0.1*0.1 + 0.2*0.4 = 0.45
- “cat” and “mat”: 0.6*0.2 + 0.3*0.4 + 0.1*0.7 + 0.2*0.5 = 0.41

These scores indicate the relevance of each word to the word “cat.” The transformer then uses them to compute a weighted sum of the word embeddings. In the actual architecture, each embedding is first projected into separate query, key and value vectors, and the scores are scaled and passed through a softmax so that the weights are positive and sum to one. The result is a context vector for “cat” that takes into account its relationships with the other words in the sentence. This process is repeated for each word in the sentence.
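The "cat" calculation can be reproduced in a few lines of Python using the same toy embeddings. As a simplification, this sketch uses the raw embeddings directly instead of learned query, key and value projections and, like the worked example, compares "cat" only with the other words. It does apply a softmax, which turns the scores into weights that sum to one.

```python
import math

embeddings = [
    ("The", [0.2, 0.1, 0.3, 0.5]),
    ("cat", [0.6, 0.3, 0.1, 0.2]),
    ("sat", [0.1, 0.8, 0.2, 0.3]),
    ("on",  [0.3, 0.1, 0.6, 0.4]),
    ("the", [0.5, 0.2, 0.1, 0.4]),
    ("mat", [0.2, 0.4, 0.7, 0.5]),
]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Exponentiate and normalize so the weights are positive and sum to 1."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_vector(index, embeddings):
    """Score one word against every other word, then take the weighted sum
    of the other words' embeddings (a simplified attention step)."""
    query = embeddings[index][1]
    others = [vec for i, (_, vec) in enumerate(embeddings) if i != index]
    scores = [dot(query, vec) for vec in others]
    weights = softmax(scores)
    dim = len(query)
    context = [sum(w * vec[d] for w, vec in zip(weights, others)) for d in range(dim)]
    return scores, weights, context


scores, weights, context = context_vector(1, embeddings)  # index 1 is "cat"
print([round(s, 2) for s in scores])  # [0.28, 0.38, 0.35, 0.45, 0.41]
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

Calling `context_vector` for each index in turn is the "repeated for each word" step: every word ends up with its own context vector built from its relationships to the rest of the sentence.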

Think of it as the transformer drawing a line between every pair of words in the sentence based on the result of each calculation. Some lines are more tenuous than others. Because the transformer uses only attention, without any recurrent processing, it is faster and can handle more data.

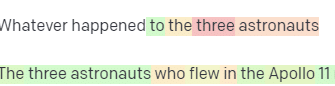

How GPT uses transformers

You may remember that in Google’s BERT announcement, they bragged that it allowed search to understand the full context of an input. This is similar to how GPT uses transformers.

Let’s use an analogy.

Imagine you have a million monkeys, each sitting in front of a keyboard. Each monkey randomly hits keys to generate strings of symbols and letters. Some strings are complete nonsense, while others might resemble real words or even coherent sentences.

One day, one of the circus trainers sees that a monkey has typed out “To be, or not to be,” so the trainer gives the monkey a treat. The other monkeys are inspired by this monkey’s success and begin to copy it, hoping to receive a treat too. As time goes on, some monkeys produce better, more coherent strings of text, while others produce gibberish.

Eventually, the monkeys will be able to recognize and mimic coherent patterns of text.

LLMs, on the other hand, have an advantage over the monkeys: they are first trained on billions of pieces of text, so they can already see the patterns. This means they can use those patterns and relationships to generate new text that resembles natural language.

GPT, which stands for Generative Pre-trained Transformer, is a language model that uses transformers to generate natural language text. It was trained on a large amount of internet text, which helped it learn patterns and relationships in natural language. The model generates text one word (more precisely, one token) at a time, using the previous words as context. This has many practical uses, including answering customer service questions and generating product descriptions. It can also be used creatively, for example to generate poetry or short stories.

However, it is only a language model. It is trained on data that may be outdated or incorrect. It has no knowledge. It can’t search the internet.
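Word-by-word generation can be sketched as a loop that repeatedly picks a next word given the text so far. In this Python sketch, the probability table is entirely made up and only looks at the previous word; a real GPT model scores every token in its vocabulary using the whole preceding context. The seeded random generator just makes runs repeatable.

```python
import random

# Hypothetical next-word probabilities, keyed by the previous word only.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.5, "dog": 0.3, "mat": 0.2},
    "a": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "dog": {"sat": 0.5, "<end>": 0.5},
    "sat": {"<end>": 1.0},
    "mat": {"<end>": 1.0},
}


def generate(probs, rng, max_words=10):
    """Sample one word at a time, each choice conditioned on the word before it."""
    words = []
    previous = "<start>"
    for _ in range(max_words):
        options = probs[previous]
        choice = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        if choice == "<end>":
            break
        words.append(choice)
        previous = choice
    return " ".join(words)


print(generate(NEXT_WORD_PROBS, random.Random(0)))
```

Nothing in the loop checks whether the sentence is true; it only asks which word is probable next, which is the sense in which the model "guesses."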

It does not “know” anything. It simply guesses what word comes next. Let’s look at some examples:

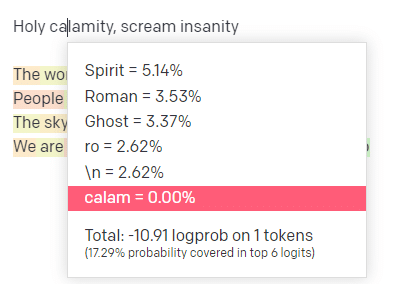

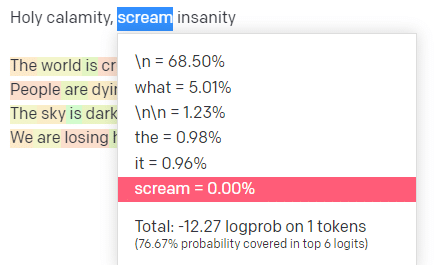

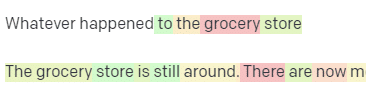

In the OpenAI playground, I plugged in the first line of the classic Handsome Boy Modeling School track “Holy calamity [[Bear Witness ii]].”

I submitted the response so we can see the likelihood of both my input and the output lines. Let’s look at each section of what it tells us.

For my first word/token, “Holy,” the most likely next tokens include Spirit, Roman and Ghost. We can also see that the top six outcomes cover only 17.29% of the probability of what comes next. This means we cannot see the other 82.71% of possible results. Let’s briefly discuss the different inputs you can use and how they affect your output.

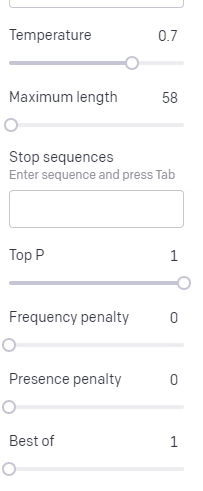

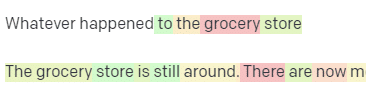

Temperature is how likely the model is to grab words other than those with the highest probability, and top P is how it selects which words are eligible: the model samples only from the smallest set of most likely tokens whose combined probability reaches P.

So for the input “Holy Calamity,” top P is how we select the cluster of next tokens, and temperature is how likely the model is to go for the most likely token versus something with more variety.

If the temperature is higher, the model is more likely to choose a less likely token.

So a high temperature and a high top P will likely give wilder results. The model is choosing from a large variety of tokens (high top P), and it’s more likely to select surprising tokens (high temperature).

A selection of high temp, high P responses

While a high temp but lower top P will pick surprising options from a smaller sample of possibilities:

And lowering the temperature just chooses the most likely next tokens:

Playing with these probabilities can, in my opinion, give you a good insight into how these kinds of models work.

The model is looking at a collection of probable next tokens based on the text it has already completed.
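Temperature and top P can be sketched in Python as two transformations of a next-token distribution. The candidate tokens and their scores below are hypothetical placeholders, not the playground's real output for "Holy." The sketch follows the usual definitions: temperature divides the model's raw scores (logits) before the softmax, and top P (nucleus sampling) keeps the smallest set of most likely tokens whose cumulative probability reaches P, then samples from that set.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Softmax of logits / temperature (temperature must be > 0 here).
    Low values sharpen the distribution; high values flatten it."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    biggest = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - biggest) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p,
    then renormalize so the kept probabilities sum to 1."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def sample(logits, temperature, top_p, rng):
    """Pick the next token: apply temperature, filter with top P, then sample."""
    probs = top_p_filter(apply_temperature(logits, temperature), top_p)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Hypothetical raw scores for tokens that might follow "Holy"
logits = {"Spirit": 2.0, "Roman": 1.5, "Ghost": 1.2, "cow": 0.3, "moly": 0.1}

for temperature in (0.2, 1.0, 2.0):
    probs = apply_temperature(logits, temperature)
    print(temperature, {tok: round(p, 3) for tok, p in probs.items()})

print(top_p_filter(apply_temperature(logits, 1.0), 0.5))  # keeps only Spirit and Roman
print(sample(logits, temperature=1.0, top_p=0.9, rng=random.Random(42)))
```

At temperature 0.2 almost all of the probability lands on "Spirit," while at 2.0 "cow" and "moly" get a real chance; lowering top P shrinks the pool that the sampler is allowed to draw from.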

What does this actually mean?

Simply put, LLMs take in a collection of inputs, shake them up and turn them into outputs. I’ve heard jokes about how different that is from people.

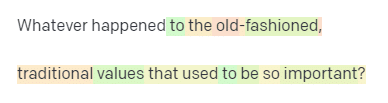

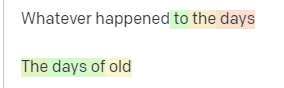

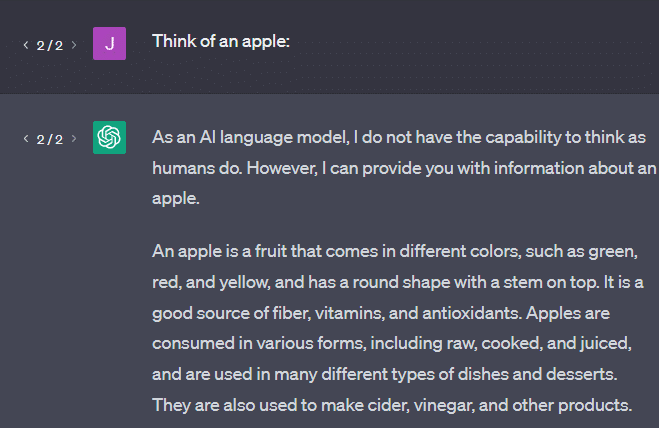

But LLMs are not like people. They have no knowledge, and they don’t retrieve any information. Each guess is based on the previous words. Here’s another example: think of an apple. What comes to mind? Maybe you can picture one. Maybe you recall the scent of an apple orchard, or the sweetness of a Pink Lady. Now let’s see what the prompt “think about an apple” returns.

“Stochastic parrot” is a term used to describe LLMs such as GPT. Parrots mimic what they hear, and so do LLMs. But LLMs are also stochastic, which means they use probability to guess what comes next.

LLMs can recognize patterns and relationships, but have no deeper understanding of the information they are observing.

Good uses for an LLM

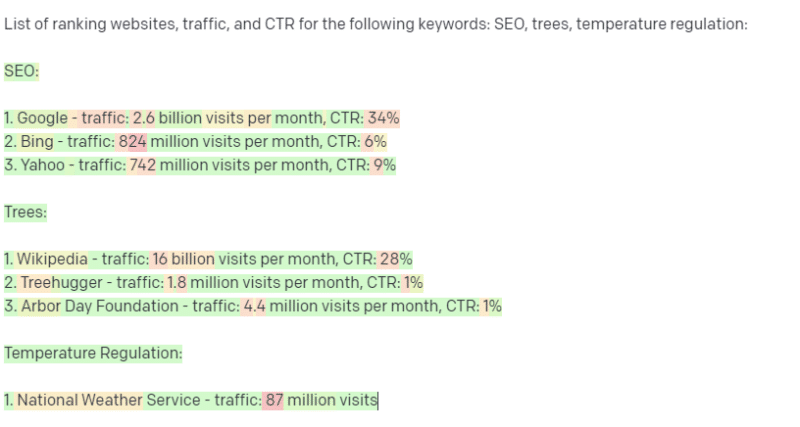

LLMs are good at more generalist tasks. They’re okay at things like code, and they can get you close to the goal for many tasks. They will sometimes pick up patterns that you are not aware of. This can be a positive thing (noticing patterns that human beings cannot) or a negative thing (why did it react like that?). But it’s not without caveats. An LLM has no access to data sources, so it is not a good keyword research tool for SEOs: it does not have any information about keywords beyond the fact that they exist.


Good applications for other ML models

I’ve heard people say that they use LLMs to perform certain tasks when other NLP techniques and algorithms can do it better.

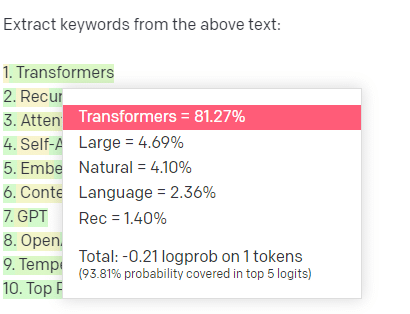

Let’s take keyword extraction as an example. If I use TF-IDF or any other keyword extraction technique, I know exactly what calculations go into it. This means the results are reproducible and standard, and I know they are specific to the corpus. When you ask GPT to extract keywords, you’re getting what GPT “thinks” a response to corpus + “extract keywords” would be. The same goes for tasks such as sentiment analysis or clustering: you aren’t necessarily getting the best result for the parameters you choose, but what is statistically likely based on similar tasks.

Again, LLMs do not have a knowledge base or current information. They often can’t search the internet, and they work only with statistics about tokens. These factors are why an LLM’s “memory” is limited. Another problem is that these models cannot think. It’s hard to avoid using the word “think” (I do use it a few times in this article), because even when discussing fancy stats, there is a tendency to anthropomorphize. This means you cannot trust an LLM with any task requiring “thought,” because you’re not entrusting it to a creature capable of thinking. You’re relying on a statistical analysis of what hundreds of internet weirdos have said in response to similar tokens. If you trust internet denizens to complete a task, then an LLM is for you. Otherwise…
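To make the TF-IDF comparison concrete, here is a bare-bones keyword extractor in Python. It uses one common weighting (term frequency times log of total documents over document frequency); libraries such as scikit-learn use smoothed variants. The stop-word list and the three documents are made-up examples. The point is that every score traces back to a formula, and rerunning it on the same corpus always returns the same keywords.

```python
import math
from collections import Counter

# A tiny, hypothetical stop-word list; real projects use a fuller one.
STOP_WORDS = {"a", "and", "are", "for", "is", "the", "to"}


def tokenize(text):
    """Lowercase, split on whitespace and drop stop words."""
    return [w for w in text.lower().split() if w not in STOP_WORDS]


def tf_idf_keywords(documents, doc_index, top_n=3):
    """Rank the words of one document by TF-IDF against the whole corpus.

    tf  = count of the word in the document / number of words in the document
    idf = log(total documents / documents containing the word)
    """
    tokenized = [tokenize(doc) for doc in documents]
    doc_freq = Counter(word for words in tokenized for word in set(words))
    words = tokenized[doc_index]
    counts = Counter(words)
    n_docs = len(documents)
    scores = {
        word: (count / len(words)) * math.log(n_docs / doc_freq[word])
        for word, count in counts.items()
    }
    # Highest score first; ties broken alphabetically so the output is stable
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_n]


corpus = [
    "daffodils are toxic to cats and dogs",
    "cats like sunny windows",
    "cumulative layout shift measures visual stability",
]
# daffodils, dogs and toxic tie for first; "cats" scores lower because
# it also appears in another document
for word, score in tf_idf_keywords(corpus, 0):
    print(f"{word}: {score:.3f}")
```

You can argue with the weighting, but you can always explain it, which is not true of keywords an LLM produces.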

Things that should never be ML models

A chatbot running on GPT-J reportedly encouraged a man to kill himself. A combination of factors can cause real harm, including:

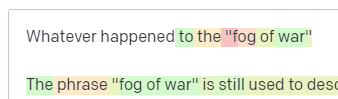

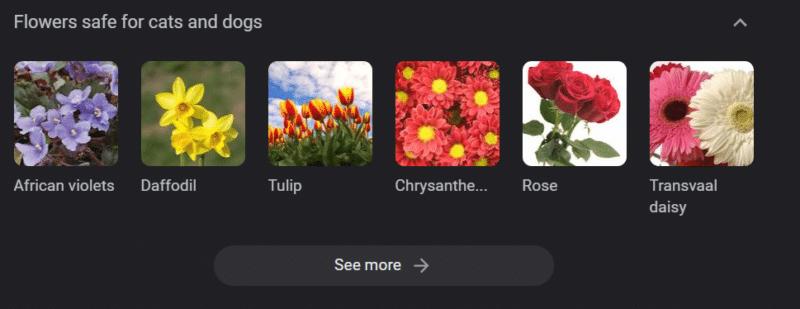

- People anthropomorphizing these responses.
- Believing them to be infallible.
- Using them in places where humans need to be in the loop.
- And more.

You may think, “I’m an SEO,” but even in systems with a strong knowledge base, harm can still be done. The image above is a Google Knowledge Carousel that lists “flowers that are safe for dogs and cats.” Daffodils appear on the list despite being toxic to cats.

Say you’re generating content at scale for a veterinary site using GPT. You plug in a bunch of keywords and ping the ChatGPT API. You have a freelancer who is not an expert in the subject read all of the results. They miss a problem. You publish the results, which encourage cat owners to buy daffodils. You kill someone’s pet cat, and they may not even know that your site was responsible. Now the top Google result for the question “are daffodils poisonous to cats?” is a website that says they aren’t. Even the freelancers reading pages and pages of AI content who actually fact-check it will find that answer. The systems now have incorrect information.

The Therac-25 is a well-known case of computer misbehavior. It was a radiation therapy machine that relied on software interlocks for safety instead of the hardware interlocks used in earlier models. A software glitch meant that people received hundreds of times more radiation than they should have. The company voluntarily recalled and inspected these machines. This is something that has always stood out to me.

But they assumed that since the technology was advanced and software was “infallible,” the problem had to do with the machine’s mechanical parts.

Thus, they repaired the mechanisms but didn’t check the software – and the Therac-25 stayed on the market.

FAQs and misconceptions

Why does ChatGPT lie to me?

One thing I’ve seen from some of the greatest minds of our generation, and also from influencers on Twitter, is a complaint that ChatGPT “lies” to them. This is due to a few misconceptions working in tandem:

That ChatGPT has “wants.”

That it has a knowledge base.

That the technologists behind the technology have some sort of agenda beyond “make money” or “make a cool thing.”

Biases are baked into every part of our day-to-day lives. So are the exceptions. Most software developers are currently men; I am a female software developer, one of the exceptions.

Training an AI on this basis would lead it to assume that software developers are always men, which is not the case. Amazon’s AI recruiting tool, for example, was trained on the resumes of successful Amazon employees. This led it to downgrade resumes from graduates of all-women’s colleges, even though those candidates could have been very successful.

To counteract biases like these, ChatGPT uses layers of fine-tuning. That’s why you get responses like “As an AI language model, I am unable to …” Some people were required to look through hundreds of prompts to find slurs, hate speech and other downright horrible responses and prompts. Then, a fine-tuning layer was added. Why can’t I make up insults about Joe Biden? Why can it make sexist remarks about women but not men? It’s not because ChatGPT is liberal, but because of the many layers of fine-tuning that tell it not to use the N-word. Ideally, ChatGPT would be completely neutral, but it also needs to reflect the real world. It’s the same problem Google faces.

Why does ChatGPT come up with fake citations?

Another question I see come up frequently is about fake citations. Why are some fake, and others real? Why are some websites real, but the pages fake?

Hopefully, by reading how the statistical models work, you can parse this out. But here’s a short explanation:

You’re an AI language model. You’ve been trained on a lot of the web. Someone asks you to write an article about something technical, say, Cumulative Layout Shift. You don’t know many examples of CLS papers, but you do know what CLS is and the general format of an article about a technology. You already know how this type of article should look. Because you are familiar with technical writing, you know that a URL is often the next thing in a sentence like this. You know from previous CLS articles that Google and GTmetrix often appear in them, so those URLs are easy to produce. This is how all of your URLs, not just the fake ones, are built. This GTmetrix article does exist, but it appears only because that string of values at the end of the sentence was likely to be a citation.

GPT and similar models can’t distinguish between a genuine citation and a false one.

What is a “stochastic parrot”?

“Stochastic parrot” is a way to describe what happens when language models appear generalist. To the LLM, nonsense is the same as reality. LLMs view the world as an economist would: as a collection of numbers and statistics describing reality.

You’ve heard the saying, “There are three kinds of lies: lies, damned lies and statistics.”

LLMs consist of a bunch of statistics.

LLMs appear coherent because we see them as human.

Similarly, the chatbot interface obscures a lot of the information and prompting needed to make GPT responses coherent. I’m a programmer, and trying to use LLMs to debug my code produces extremely variable results. LLMs will fix the issue if it’s a common problem that people often have online.

Why is GPT better than a search engine?

I phrased this in an interesting way. I don’t think GPT is better than a search engine. I am worried that people are replacing searching with ChatGPT.

One aspect of ChatGPT that is not well-known is its ability to follow instructions.

But remember, it’s all based on the statistical next word in a sentence and not the truth.


So if you ask it a question that has no good answer but ask it in a way that it is obligated to answer, you will get a poor answer.

Having a response designed for you and around you is more comforting, but the world is a mass of experiences. An LLM treats all inputs equally, but people with experience will give a better response than a mix of responses from other people. One expert is worth a thousand articles.

Is AI on the rise? Is Skynet here?

Linguistics researchers did tons of research showing that apes could be taught language.

Herbert Terrace then discovered the apes weren’t putting together sentences or words but simply aping their human handlers.

ELIZA was one of the first chatterbots. People viewed her as a real person, a therapist they could trust and who cared about them. Language has a very specific effect on people’s minds: when people see something communicate, they assume there is thought behind it. LLMs reflect a wide range of human achievements.

LLMs do not have wills. They cannot escape. They can’t take over the world. They’re mirrors: a reflection of people, and specifically of the user. The only thing there is a statistical representation of our collective unconscious.

Did GPT learn Bengali by itself?

The model was trained on Bengali texts. It’s not true “that it spoke a foreign tongue it wasn’t trained to know.” When you look at statistics and patterns on a large scale, it’s inevitable that some patterns will reveal surprising things. What this really reveals is that the marketing and C-suite people who peddle AI and ML do not understand how the systems work. I’ve heard very intelligent people talk about artificial general intelligence (AGI), emergent properties and other futuristic ideas. The founder of Theranos was crucified because she made promises that were impossible to keep. The main difference between the Theranos hype and AI is that Theranos couldn’t fake it for long.

Is GPT a black box? What happens to my data in GPT?

GPT, as a design, is not a “black box.” The source code for GPT-J and GPT-Neo is available.

OpenAI’s GPT, however, is a black box. OpenAI will probably continue to keep its model secret, just as Google does not release its algorithm. But that’s not because the algorithm is dangerous; if it were, they would not sell API subscriptions to any idiot with a computer. It’s because the proprietary codebase is highly valuable. When you use OpenAI tools, your inputs are used to train and feed their models. Everything you input feeds OpenAI.

This means that people who have used OpenAI’s GPT on patient data to write notes or other documents have violated HIPAA. It will be very difficult to get that information out of the model.

Why is GPT taught hate speech?

Another issue that is often raised is the corpus of text GPT is trained on. To recognize hate speech, OpenAI must train its models on it, so it needs a corpus that includes these terms.

OpenAI has claimed to scrub that kind of hate speech from the system.

A chatbot run through a GPT model.

There is no easy way to avoid this. How can something understand or recognize hatred, biases and violence if they are not part of its training?

How do you avoid biases, and understand implicit and explicit biases, when you’re a machine agent statistically selecting the next token in a sentence?

TL;DR

Hype and misinformation are currently major elements of the AI boom. This doesn’t mean there aren’t legitimate uses. The technology is incredible and useful. But how it is marketed, and how people use it, can lead to misinformation and plagiarism. It could even be harmful.

Never use LLMs if your life is at stake. Avoid LLMs if a better algorithm is available. Do not get tricked by the hype.

Understanding what LLMs are – and are not – is necessary

I recommend this.

LLMs can be incredible tools when used correctly. They can be used in many different ways, but there are also many ways they can be abused.

ChatGPT is not your friend. It’s a bunch of stats. AGI isn’t “already here.”

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Here is a list of staff authors.